The Attention Brokers

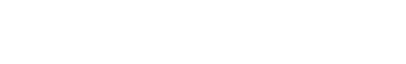

In 1997, a Computerworld column called out a particular strain of internet boosterism. “One of the most recent forecasts for the Web is that it will let companies sell their products directly, bypassing the traditional need for channel support,” David Moschella wrote. “Frequently cited examples include online booksellers, stock traders and travel agencies. Those certainly are interesting examples and powerful examples of the Web’s potential,” he continued, “but even a cursory analysis reveals that those innovations have almost nothing to do with disintermediation.”

Internet commerce had arrived, as the prophets foretold! Except it wasn’t Random House or Doubleday selling books, or the authors themselves. It was Amazon. Airlines were selling tickets directly, but middleman sites like Expedia, which aggregated tickets in one place, were clearly the future. “At this stage,” he concluded, “we should recognize that competition on the web is mostly about the battle between channels, not their elimination.” Just because two people anywhere in the world can now share information with each other instantly doesn’t mean there isn’t plenty of space in between.

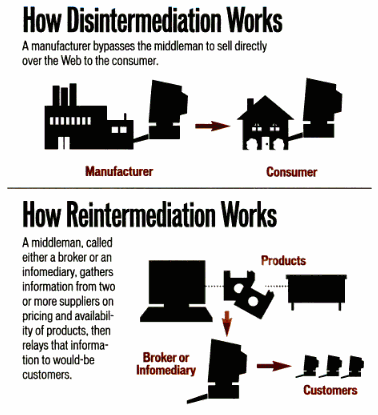

Two years later, in the same magazine, this process — of internet companies destroying middlemen only to eventually become them — was described with another term borrowed from the world of finance: reintermediation.

In 1998, Jack Shafer, then deputy editor of “the on-line magazine” Slate, imagined the media’s odd and accelerated version of this cycle:

Already, electronic commerce mavens are talking about ‘reintermediation,’ in which a new breed of Web middleman will rise to make sense out of the chaos wrought by disintermediation. As the technology of the Web evolves, Internet devices will become as ubiquitous as telephones. Every newspaper will have the potential to break news as fast as a television station. Every television station will have the potential to become a newspaper.

This was a column about the Drudge Report, but replace a few proper nouns and it could almost be a piece about blogging a few years later, or about citizen journalism a few years after that, or about Twitter a few years after that. This framework is useful for describing much bigger things that happened elsewhere online, too. From big web portals to search engines to social media, over the last twenty years the web has been passed, in pieces, between middlemen with either the biggest audiences or a more efficient — or convenient, or useful — form of intermediation. What seems to have changed, mostly, is how fast the hand-offs happen, and how ambitious the middlemen are.

The marginalization of web publishers has been swift. This week seems to be a milestone, at least psychologically, as Facebook, which routes an enormous proportion of the world’s mobile web traffic, prepares to assume the role of funder, distributor and host for news. The Wall Street Journal, writing about Facebook’s plan to host news organizations’ articles (in addition to videos, which are already hosted there) on its own site and app, did so from a notably compliant perspective.

Facebook Inc. is offering to let publishers keep all the revenue from certain advertisements, in a bid to persuade them to distribute content through the social network, according to people familiar with the matter.

Many publishers now post links to their content on Facebook, which has become an important source of online traffic for news sites. But opening those links on a mobile device can be slow and frustrating, taking around eight seconds.

The Facebook initiative, dubbed Instant Articles, is aimed at speeding that process, people familiar with the matter said.

Business Insider laid out the broader situation:

Traditionally, media companies have operated independently and controlled their own destinies. They owned the whole content supply chain, from research to writing to publication to distribution. In the digital era, they built their own web sites, which drew loyal readers (direct traffic), and they sold most of the ads that ran on their sites, keeping 100% of the revenue.

Those days are gone.

Now the fate of publishers increasingly depends on social platforms like Facebook, where billions of people discover news to read and videos to watch. And the social platforms are equally interested in the media business.

“Facebook’s plan,” the article continues, is to “own articles, not media companies.” (All such writing is slightly tense and cautious, sort of like when the Times reports on itself. How are most people going to come to this article about Facebook? Where, a year from now, might they be reading it?)

Facebook’s is a reintermediation by default: the service is such a dominant source of readers — much more so than any other individual website — that it can characterize eight-second load times as a legitimate reason for publishers to grant hosting and distribution of their product to Facebook. From the publisher’s perspective, Facebook went from non-entity to link-sharing site to meaningful source of traffic to a source too large not to accommodate, and finally to an existential threat. From Facebook’s perspective, this story ran a little less dramatically: it allowed users to add links alongside status updates and photos of friends; it allowed them to be shared, like anything else on the site; it found that these links — which were, at the time, the best way to give Facebook users a way to reference the world outside Facebook — made people use Facebook more. So why should they remain links?

This dissonance explains a lot of the panic welling up across online media right now: To publishers, perhaps blinded by the enormous traffic from Facebook they had come to depend on, and which inflated them to unimagined sizes, this is seen as a bait-and-switch; to Facebook, keeping people on its site and in its app, rather than sending them away multiple times a day, is an obvious product choice — as obvious, to the company, as saying an embedded photo is better than a link to a photo, or that an embedded video is better than a link to a video embedded elsewhere. If sharing is taken as approval, Facebook users would probably agree: It’s not Facebook standing between websites and their readers, it’s websites standing between the things they’re trying to read and the feed they want to read them on.

You only have to look back to the middleman service Facebook supplanted to find precedent for this type of argument. From an interview with the creator of Google News, back in 2007:

I think the newspapers should understand and recognise the benefits of what we have done both for them and for the readers. What Google has always tried to do is to make information accessible and useful. We view ourselves as credible, trusted, conscientious intermediary bringing people, who come to us for information, to the right source. We don’t want to serve or own the content but want to direct readers to where the news lives which is various websites of newspapers. We also want to make the whole process very efficient.

It’s extremely unfriendly for me to go to one website for news and then go back and track other sites to get the anti- or a different perspective of the same event, at any given point in time. No reader would bother to do that.

Whether or not Google is (or was) a “conscientious intermediary” is still up for somewhat low-stakes debate. Google News was eventually shut down in Spain after the introduction of rules requiring the company to pay publishers for their content. Now, in an apparent effort to atone (and deflect European antitrust action), the company is giving more than one hundred and fifty million dollars to European companies to reward “‘risk-taking’ in digital journalism.”

This was a comparatively small and specific fight, and one that has now outlasted its relevance. The manner in which Google News selected and ranked stories was a constant source of stress for publishers because the site sent significant traffic (I remember, at an old job, the frustration of watching foreign syndications of my stories rank above the originals). Perhaps if Google News had kept its influence for a few years longer, and kept growing, it would be asking publishers to host their content for an advertising share (it has, for example, hosted wire stories from services without websites). Maybe it would be making a similar argument: Google News is where everyone gets their stories on their phones, so why should they be links? Why shouldn’t we just host them ourselves?

An internet intermediated by one middleman — an internet that has been effectively consolidated into a single private platform, or into a set of platforms owned by one company — is an internet on which the concept of a publisher is incoherent. At best publishers become euphemistic partners. Otherwise they are reduced to their brands — brands which will then contort to satisfy not just an array of decentralized commercial requirements, as they always have, often embarrassingly, but the demands, both explicit and structural, of a single self-interested entity.

It’s useful to imagine alternative histories because the real ones come so quickly. The internet’s earliest software middlemen had the advantage of being able to remove physical inefficiencies — brick-and-mortar stores, newspapers sent through the mail — and therefore did so deliberately. It was the elimination of an obvious inefficiency that was intended to win people over, and which sometimes did. Disintermediation looks a little different when it’s purely online. A newcomer’s advantage can be defined by ever-smaller differences in time and convenience, or purely by the presence of masses of people. Seamless, for example, can exchange a small and intoxicating convenience for an enormous amount of power; Facebook gets the news because it’s already there. Attention: it makes a strange product.

But the internet is young! It’s full of companies and systems that are, basically, accidents writ large. It’s only from within the web — the loose collection of websites that we think of as important — that its design and contortions seem familiar and right. Taken together, and viewed from the outside, websites, like print publications before them, compose an ugly but functional apparatus crudely organized around commerce and advertising. What they don’t have is the institutional ease and momentum that comes with centralization and size; the digital equivalent of the advantages enjoyed by, say, a Walmart. The other thing they don’t have is your friends.

That you should “go to where your readers are” is fast becoming conventional wisdom among publishers, which is always a sign to start thinking about something else. Google had a profound effect on how people read news on the internet, as well as how it was produced (never forget: Demand Media went public!). But its influence waned, just as Yahoo’s did, just as Aol’s did, just as MSN’s did. Here is a Times story from September 2000, worrying about the state of e-commerce: Are web portals like Yahoo losing sway to sites… with games?

A year ago, the portals were the top source of referrals for 54 percent of all e-commerce sites, the study found, compared with 46 percent of e-commerce sites today. The leading referral site, Yahoo, was the top source of traffic for 30 percent of e-commerce sites last July, but only 18 percent of Internet retailers this summer. The data for the survey was based on 809 sites tracked by Nielsen/NetRatings.

Sean Kaldor, vice president for e-commerce for Nielsen/NetRatings, said retailers were seeking out partnerships with types of Internet companies other than portals. Moreover, the study found a new category of sites is gaining influence in the referral arena: sweepstakes and lottery sites, like Iwon.com, Freelotto.com and Gamesville.com, in part because those sites offer partners an alternative business model.

According to the study, less than 1 percent of referral traffic to e-commerce sites came from sweepstakes or game sites in July 1999, but by July 2000, that figure was nearly 8 percent. Mr. Kaldor said these sites were gaining influence partly because they generally offered partners a ‘’pay per click’’ advertising model.

It’s 2000. Is the future of commerce beholden to a few sites that exist primarily to test gambling law to its limits? Sure, why the hell not. (Also: “partners,” lol.)

Facebook’s dominance is unprecedented. It is large to the point that it can reasonably claim the ability to reintermediate the entire internet; in countries where the internet is less common, it is doing so in a much more assertive way. But a consequence of Facebook’s reintermediation is that it will create a visible new middleman to eliminate. Its success will illuminate a new structural inefficiency. Step back a few feet (or inches) and re-ask Facebook’s rhetorical questions: Why should people have to wait eight seconds to read an article? Why should they ever have to leave? Grant them, and then take the next logical step: Why should people have to sort through a Facebook feed to get their news?

To someone who makes software, or works at Facebook, this is not a shocking question. It’s a company that knows how quickly things change. Its sudden power over publishers and the news industry is the consequence of a long chain of events that unfolded in an industry that happened to be exploding: the invention of a better touchscreen phone; the widespread adoption of its basic design; the dominance, on mobile networks fast enough to serve the internet but still conspicuously slow, of manually refreshed text-based feeds; Facebook’s fortunate ownership of just such a feed primed with hundreds of millions of users; Facebook’s determined emphasis on getting its app onto phones after stubbornly resisting them long enough that its tardiness had become “a black eye” for the company. The situation web publishers find themselves in is a tertiary consequence of the success of a single company in a historic internet land rush. Facebook is spending billions of dollars to insert itself, preferably early, into the next such chain. Is the future of news celebrity gossip sites posted entirely on Instagram (which Facebook bought in 2012)? Is it people texting each other in apps? Is it… solitary virtual reality pleasure helmets? Again: sure.

The prospect of an internet functionally administrated by a single advertising company has awakened some dormant lobes among the people most responsible for the contours of the commercial internet. The venture capitalists are happily reaping their rewards from a thousand software toll booths, but are beginning to mumble about something bigger:

There’s this hopelessly geeky new technology. It’s too hard to understand and use. How could it ever break the mass market? Yet developers are excited, venture capital is pouring in, and industry players are taking note. Something big might be happening.

That is exactly how the Web looked back in 1994 — right before it exploded. Two decades later, it’s beginning to feel like we might be at a similar liminal moment. Our new contender for the Next Big Thing is the blockchain — the baffling yet alluring innovation that underlies the Bitcoin digital currency.

Encoded in all the confounding hype around Bitcoin, which was covered mostly for its fluctuations in price, is the idea that its public register of all transactions — the blockchain — would disintermediate the internet as we all know it. It’s the internet inoculated against the ills of the internet! It is to the commercial web born in the 90s what that web was to so many non-web businesses before it, if you believe the people who are already investing in it.

Does this mean the next internet, after the big centralization we’re experiencing now, will be less hostile to organizations that wish to exist for reasons beyond the claiming of space and attention? Maybe. But who knows when that will happen? It’s certainly not something a publication can afford to bet on now. What web publishing — and, more important, much of what we refer to as media — can bet on is a coming period of indeterminate length in which the concepts of innovation and compliance will mingle in disorienting ways.

In conclusion, haha, ashkjghasgauosghasugas;gashgk, who knows. Is it time to start talking about a public media for the internet? To start imagining structures within which at least nominally independent media can preserve itself? To do away with the conveniently defined “old ideas” altogether? For the last time: sure. But when wasn’t it?

The CONTENT WARS is an occasional column intended to keep a majority of CONTENT coverage in one easily avoidable place.

Self-organizing robot swarm gifs from this video published by Harvard University