Wikipedia And The Death Of The Expert

“Learners are doers, not recipients.” — Walter J. Ong, “McLuhan as Teacher: The Future Is a Thing of the Past”

It’s high time people stopped kvetching about Wikipedia, which has long been the best encyclopedia available in English, and started figuring out what it portends instead. For one thing, Wikipedia is forcing us to confront the paradox inherent in the idea of learners as “doers, not recipients.” If learners are indeed doers and not recipients, from whom are they learning? From one another, it appears; same as it ever was.

It’s been over five years since the landmark study in Nature that showed “few differences in accuracy” between Wikipedia and the Encyclopedia Britannica. Though the honchos at Britannica threw a big hissy at the surprising results of that study, Nature stood by its methods and results, and a number of subsequent studies have confirmed its findings; so far as general accuracy of content is concerned, Wikipedia is comparable to conventionally compiled encyclopedias, including Britannica.

There were a few dust-ups in the wake of the Nature affair, notably the Middlebury College history department’s banning of Wikipedia citations in student papers in 2007. The resulting debate turned out to be quite helpful, as a number of librarians finally popped out of the woodwork to say hey, now wait one minute, no undergraduate paper should be citing any encyclopedia whatsoever, which, doy, and it ought to have been pointed out a lot sooner.

By 2009 the complaints had more or less faded away, and nowadays what you have is college librarians writing blog posts in which they continue to reiterate the blindingly obvious: “Wikipedia is an excellent tool for leading you to more information. It is a step along the way, and it is extremely valuable.”

Wikipedia’s Rough Riders

How come Wikipedia hasn’t turned into a giant glob of graffiti? It certainly would have by now, were it not for the multitude of volunteer sheriffs of the information highway who ride around patrolling the thing day and night.

There is a bogglingly complex and well-staffed system for dealing with errors and disputes on Wikipedia. There are special tools provided to volunteers for preventing vandalism, decreasing administrative workload and so on: rollbacker, autopatroller and the like. Then there are nearly two thousand administrators, who are empowered to “protect, delete and restore pages, move pages over redirects, hide and delete page revisions, and block other editors.”

Higher up the tree, there is MedCom, a committee of mediators, and then there are the arbitrators (just 16 of them, at this time) who handle more serious beefs. The bar for arbitrators is high. Potential candidates are limited to those who have made their bones by contributing many hundreds of hours of work. A look at the Wikipedia page detailing current requests for arbitration gives an idea of the kinds of disputes resolved by arbitrators and the methods through which they’re settled.

At the top of this loosely organized but large and passionate governing force is the Wikimedia Board of Trustees, currently a group of ten that includes Jimmy Wales, “Chairman Emeritus.” Three seats, including that of the current Chairman, Ting Chen, are held by community members — that is to say, interested individuals of no particular expertise outside their own deep and long-standing volunteer involvement, elected by “active members” of the Wikimedia community (an “active member” is someone who has made a certain number of edits to articles within a certain timeframe).

Other, parallel systems of control at Wikipedia have grown more robust as well, such as the informally organized “projects” like WikiProject: Medicine, in which anyone interested can help improve the quality of articles relating to medicine.

In short, there is a byzantine array of forces working for accuracy and against edit-warring, sock-puppetry and the like on Wikipedia. (Ira Matetsky, a Wikipedia arbitrator known on the site as Newyorkbrad, posted a long and fascinating account of Wikipedia’s administrative processes at The Volokh Conspiracy in May 2009, if you’re interested in more detail.)

It’s not perfect, of course, but neither is any other human-derived resource, including, as if it were necessary to say so, printed encyclopedias or books. It bears mentioning that if Wikipedia is a valuable resource, that is because a lot of people — untold thousands, in fact — are busting tail to make it that way.

Faster Encyclopedia, Fill Fill

Wikipedia has three main advantages over its print ancestors:

1. Wikipedia offers far richer, more comprehensive citations to source materials and bibliographies on- and offline, thereby providing a far better entry point for serious study;

2. It is instantly responsive to new developments;

3. Most significantly, users can “look under the hood” of Wikipedia in order to investigate the controversial or doubtful aspects of any given subject. I refer to the magical “History” button that appears in the top right corner of any Wikipedia page. Click this, and you will have instantaneous access to everything that has ever been written on the Wikipedia page in question. (In rare cases, e.g., during an edit war, a Wikipedia administrator may remove material, but this almost never happens.) The course of long and intricately involved disputations may thus come instantly to light. Of course, a load of dimwitted yelling and general codswallop may also emerge, but let’s face it, the same thing happens with any given stack of books in the library, only in more formal, less convenient packaging.

Take the case of Daniel Jonah Goldhagen, the historian and author of Hitler’s Willing Executioners, which examined the complicity of ordinary Germans in the atrocities of the Second World War. Prof. Goldhagen’s Wikipedia page has been revised 607 times since its first publication in 2004. Someone pops in there every week or two and removes or adds his brickbats or accolades, often according to his own political leanings. (I’m a fan of this controversial author, despite disagreeing with him on a number of contemporary political issues.) The current page is spare, but it’s also entirely lacking in anything weird, rude or inaccurate. There’s no evidence of disputes requiring intervention by mediators or arbitrators, and reading all the edits gives a very good idea of what all the rumpus over the years has been about. History pages like this one, showing two different edits of the Goldhagen page, provide a clear illustration of the ongoing attempt to strike a fair balance of views. Goldhagen’s page also provides an excellent resource for further reading, including 47 references, ten links to the author’s articles and websites, and thirteen bibliographical entries.

It’s this third innovation that makes Wikipedia far more than just a portal to research, though indeed no ordinary encyclopedia, whether printed or online, can touch it for that. Rather than being just a tempest in the teacup of publishing, Wikipedia is the foreshock of an epistemological earthquake to rival the one set rumbling by Johannes Gutenberg ca. 1439.

Bob Stein, founder and co-director of the Institute for the Future of the Book (and co-founder, in 1984, of the Criterion Collection company) has been writing persuasively in this vein about Wikipedia for years now. I asked him recently to give an update on his views, and he said that if I wanted to understand the significance of Wikipedia, I should read Marshall McLuhan.

“Go back and study the shift in human communication, what McLuhan called ‘the shift to print,’” he said. “The place where an idea could be owned by a single person. One of McLuhan’s genius insights was his understanding of how the shift from an oral culture to one based on print gave rise to our modern notion of the individual as the originator and owner of particular ideas.”

According to McLuhan, Bob explained, “the ownership of an idea” was made inevitable by the invention of printing; it is this era that we are outgrowing, as McLuhan foresaw. “If the printing press empowered the individual, the digital world empowers collaboration.”

Straight Outta Cambridge

“The ruinous authority of experts […] was McLuhan’s lifelong theme.” — Philip Marchand, Marshall McLuhan: The Medium and the Messenger

McLuhan’s chief insights centered around the idea that technology strongly affects not only the content of culture, but the mind that creates and consumes that culture. He maintained that technology alters cognition itself, all the way down to its deepest, most elemental processes.

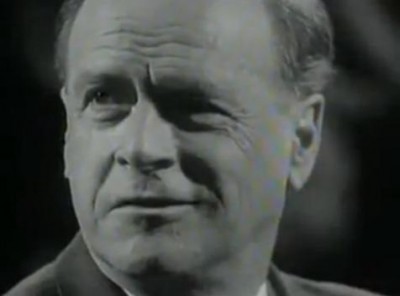

His 1962 The Gutenberg Galaxy is a difficult, disorderly, weirdly prescient and often dazzling book. Reading it is like riding on an old wooden rollercoaster that is threatening to blast apart at each turn; it isn’t organized into chapters and doesn’t make a linear argument; its insights throw off sparks in all directions. On the surface, The Gutenberg Galaxy is about the end of an evolutionary progress from print (“linear,” “authoritative”) to digital (“collaborative,” “tribal”) ways of reasoning.

McLuhan prefigured the Internet era in a number of surprising ways. As he said in a March 1969 Playboy interview: “The computer thus holds out the promise of a technologically engendered state of universal understanding and unity, a state of absorption in the Logos that could knit mankind into one family and create a perpetuity of harmony and peace.”

McLuhan came of age at Cambridge, the cradle of modern literary criticism, in that groundbreaking moment when (a) the role of readers and (b) the world at large suddenly became matters of interest to literary scholars. As the New Critics would come to do in the U.S., the Cambridge gang sought the meaning of a literary work in the text itself, in its means of communicating its message to a reader.

Before these rationalists came on the scene, literary criticism had a mystical character rooted in the Romantic ideas of guys like Walter Pater, who viewed literary production and consumption both as occurring through the inspiration of an almost divine agency. (The phrase “purple prose” might have been invented for Pater, who was given to such turns of phrase as “to burn always with this hard, gem-like flame, to maintain this ecstasy, is success in life.”) Artists ranging from the Pre-Raphaelites to Oscar Wilde bought into this super-aestheticized model of understanding art and literature, but it was ill-attuned to the rationalist demands of a post-industrial society (though we aren’t yet quite free of this idea of the Muse striking us with the inspirational equivalent of Cupid’s dart; Harold Bloom, for example, is still forever blathering on about Pater).

Modern criticism was also born out of frustration with the hidebound academics who appeared to believe that English literature had ended in the 17th century. F. R. Leavis, an influential critic who taught McLuhan at Cambridge, was among the first who dared to rank Pound and Eliot alongside Milton. The view of the scholarly establishment on both sides of the Atlantic had theretofore been that it would take you a lifetime simply to master the recondite joys of Milton; that was the true and real study of literature, and nothing written in our own lifetimes was ever going to count. It took some serious English-department renegades to alter those convictions. Studying under Leavis at Cambridge, McLuhan developed the beginnings of the lifelong distaste for “expertise” and “authority” that would come to characterize his work.

McLuhan took Leavis’s methods far beyond literature, though. Just as, in Leavis’s view, a poem imposed its own assumptions on the listener, created its own world, so too did every medium of communication force its own methods of connection into the human mind. The late David Lochhead, a Canadian theologian, did a lovely job of explaining McLuhan’s approach in 1994.

It is not only our material environment that is transformed by our machinery. We take our technology into the deepest recesses of our souls. Our view of reality, our structures of meaning, our sense of identity — all are touched and transformed by the technologies which we have allowed to mediate between ourselves and our world. We create machines in our own image and they, in turn, recreate us in theirs.[…]

Our machines allow us to reach out beyond the limits of our flesh. Our machines alter the ways in which our senses feed us information about the world beyond. […] Our machines offer us an image of ourselves — an image, which like the reflection of Narcissus, can hold us transfixed in self-adoration.

McLuhan drew from many, many sources in order to develop these ideas; the work of Canadian political economist and media theorist Harold Innis was instrumental for him. Innis’s technique, like McLuhan’s, forswears the building up of a convincing argument, or any attempt at “proof,” instead gathering in a ton of disparate ideas from different disciplines that might seem irreconcilable at first; yet considering them together results in a shifted perspective, and a cascade of new insights.

In the familiar, linear method of argument, it’s as if the author were a trial attorney and the reader a juror. By contrast, the McLuhan/Innis method is more like throwing the reader in a helicopter, taking him somewhere far away and simply exposing him to a vast new panorama. These authors wanted not to make and sell their own “point of view,” but to take you on a head trip instead.

As McLuhan writes in The Gutenberg Galaxy:

Innis sacrificed point of view and prestige to his sense of the urgent need for insight … When he interrelates the development of the steam press with “the consolidation of the vernaculars” and the rise of nationalism and revolution he is not reporting anybody’s point of view, least of all his own. He is setting up a mosaic configuration or galaxy for insight … Innis makes no effort to “spell out” the interrelations between the components in his galaxy. He offers no consumer packages in his later work, but only do-it-yourself kits, like a symbolist poet or an abstract painter.

All these elements — the abandonment of “point of view,” the willingness to consider the present with the same urgency as the past, the borrowing “of wit or wisdom from any man who is capable of lending us either,” the desire to understand the mechanisms by which we are made to understand — are cornerstones of intellectual innovation in the Internet age. In particular, the liberation from “authorship” (brought about by the emergence of a “hive mind”) is starting to have immediate implications that few beside McLuhan foresaw. His work represents a synthesis of the main precepts of New Criticism with what we have come to call cultural criticism and/or media theory.

How neatly does this dovetail into a subtle and surprising new appreciation of the communal knowledge-making at Wikipedia?! It’s no wonder that McLuhan is among the patron saints of the Internet.

It’s no accident, either, that from grad school onward McLuhan was involved in collaborative projects that drew in a wide variety of disciplines, institutions, students, and paths of inquiry. If the results were chaotic (and they often were) they were also vital and thrilling. He worked with educators, corporate executives, computer scientists and management theorists; he helped develop high-school media syllabi, designed a study relating dyslexia to television watching, and conducted sensory tests for IBM. (For more on McLuhan, I can highly recommend Philip Marchand’s fine biography, The Medium and the Messenger.)

McLuhan’s insights, though they are being lived by millions every day, will take a long time to become fully manifest. But it’s already clear that Wikipedia, along with other crowd-sourced resources, is wreaking a certain amount of McLuhanesque havoc on conventional notions of “authority,” “authorship,” and even “knowledge.”

The Internet Is Making Us Maoist

Though librarians and the academy in general have more or less fallen into line, there is still considerable critical opposition to the spread and influence of Wikipedia and of the Internet in general as a cultural and intellectual force.

In an influential 2006 piece at Edge, “Digital Maoism: The Hazards of the New Online Collectivism,” Jaron Lanier wrote that “the hive mind is for the most part stupid and boring” and pronounced the concept of an all-wise collective not only faddish, but wrong and dangerous. He expressed a conservative contempt for “the collective” (by which he more or less means “the mob”) and a staunch faith in the validity and significance of “authorship” and “individuality.”

From the same essay: “The beauty of the Internet is that it connects people. The value is in the other people. If we start to believe that the Internet itself is an entity that has something to say, we’re devaluing those people and making ourselves into idiots.” Well okay. I guess if we started to believe that the Internet itself were writing Wikipedia we would be in some trouble, or maybe we would be Rod Serling, I don’t know.

A lot of things have changed since 2006, but Mr. Lanier’s mind is not among them. Seriously, reading his stuff is like watching a guy lose his shirt at the roulette wheel and still he keeps on grimly putting everything on the same number. Lanier’s reasoning is right next door to that of Nicholas Carr (The Internet Is Making Us Stupid), Evgeny Morozov (The Internet Is Worse Than Useless Politically), Malcolm Gladwell (oh, don’t even get me started) and Sherry Turkle (The Internet Is Making Us Ever So Lonely).

So in his 2010 book, You Are Not a Gadget: A Manifesto, Lanier kept up the attack on Wikipedia and other forms of crowdsourcing. From a 2010 interview:

On one level, the Internet has become anti-intellectual because Web 2.0 collectivism has killed the individual voice. It is increasingly disheartening to write about any topic in depth these days, because people will only read what the first link from a search engine directs them to, and that will typically be the collective expression of the Wikipedia.

Stop saying “the Wikipedia”! Anyhoo, it’s difficult to see how Lanier, Carr et al. will be able to keep this sort of thing up for much longer. Michael Agger tore Lanier’s book to ribbons in Slate: “[Lanier’s] critique is ultimately just a particular brand of snobbery. [He] is a Romantic snob. He believes in individual genius and creativity, whether it’s Steve Jobs driving a company to create the iPhone or a girl in a basement composing a song on an unusual musical instrument.”

But how come we’re still even discussing this, when Bob Stein already made mincemeat (very kindly, but mincemeat) of “Digital Maoism” right when it came out, in 2006? And how come the crux of Stein’s observations went pretty much unnoticed?

In a traditional encyclopedia, experts write articles that are permanently encased in authoritative editions. The writing and editing goes on behind the scenes, effectively hiding the process that produces the published article. […] Jaron focuses on the “finished piece,” ie. the latest version of a Wikipedia article. In fact what is most illuminative is the back-and-forth that occurs between a topic’s many author/editors. I think there is a lot to be learned by studying the points of dissent. […]

At its core, Jaron’s piece defends the traditional role of the independent author, particularly the hierarchy that renders readers as passive recipients of an author’s wisdom. Jaron is fundamentally resistant to the new emerging sense of the author as moderator — someone able to marshal “the wisdom of the network.”

There has been some comment as to how this model of understanding actually works, but we need a lot more. The alteration in the way we think of authorship is deeper and more subtle than has yet been widely discussed. As Stein said to me recently, “The truth of a discipline, idea or episode in history lies in these interstices. If you want to understand something complicated it’s helpful to look at the back and forth of competing voices or views.”

Events have long ago overtaken the small matter of “the independent author.” The line between author and reader is blurring, whether we like it or not. The question that counts now is: how can we use that incontrovertible fact to all our benefit?

The End Of Truth

There’s an enormous difference between understanding something and deciding something. Only in the latter case must options be weighed, and one chosen. Wikipedia is like a laboratory for this new way of public reasoning for the purpose of understanding, an extended polylogue embracing every reader in an ever-larger, never-ending dialectic. Rather than being handed an “authoritative” decision, you’re given the means for rolling your own.

We can call this new way of looking at things post-linear or even “post-fact,” as Clay Shirky put it in a recent and thrill-packed interview with me. (This was a wicked nod to Farhad Manjoo, whose book True Enough: Learning to Live in a Post-Fact Society is dead against the idea of being “post-fact.”) Shirky is himself a somewhat McLuhan-esque figure, a rapid-fire talker whose conversation is like a lit-up pinball machine with insights all caroming against one another.

“Those who are wringing their hands over Wikipedia are those committed to the idea of some uncomplicated ‘truth,’” he said, going on to characterize the early controversy between Britannica and Wikipedia as “an anguish regarding authority … that there are no guarantees to truth.”

He continued: “The threat to Britannica from Wikipedia is not a matter of dueling methods of providing information. Wikipedia, if it works better than Britannica, threatens not only its authority as a source of information, but also the theory of knowledge on which Britannica is founded. On Wikipedia ‘the author’ is distributed, and this fact is indigestible to current models of thinking.

“Wikipedia is forcing people to accept the stone-cold bummer that knowledge is produced and constructed by argument rather than by divine inspiration.”

He went on to illustrate with the example of alchemy.

“Alchemists kept their practices shrouded in secrecy. The shift from alchemy to chemistry wasn’t in the practitioners, nor in the instruments they used. The difference was that chemists had become willing to expose their methods and conclusions to the withering scrutiny of their peers,” he said. “The chemists said, ‘We don’t want to believe what isn’t true,’ and then: ‘It’s not true until someone else checks your work.’ This doubting impulse led to things like peer review, the duplication of experiments, the foundation of modern science.”

By empowering readers and observers with transparent access to the means by which conclusions are reached, rather than assembling them in an audience to hear the Authorities deliver the catechism from on high, we are all of us becoming scientists in this way, entering into a democracy of the intellect that is already bearing spectacular fruit, not just at Wikipedia but through any number of collaborative projects, from Project Gutenberg to Tor to Linux.

But there continues to be resistance to the idea that expertise itself has been called into question, and we can expect that resistance to continue. Experts, understandably, are apt to be annoyed by their devaluation, and are liable to make their displeasure felt. And the thing about experts is that a lot of people still feel disinclined to question them.

Experts, geniuses, authorities, “authors”: we were taught to believe that these should be questioned, but until now have not often been given a way to do so, to seek out and test for ourselves the exact means by which they reached their conclusions. So long as we believe that there is such a thing as an expert rather than a fellow-investigator, then that person’s views just by magic will be worth more than our own, no matter how much or how often actual events have shown this not to be the case. This magical thinking about “individualism” is, then, pernicious politically, intellectually, in every way. That is not to say that we don’t value those who can lead the conversation. We’ll need them more and more, those “who are able to marshal the wisdom of the network,” to use Bob Stein’s words. But they might be more like DJs, assembling new ways of looking at things from a huge variety of elements, than like judges whose processes are secret, and whose opinions are sacred.

And there’s so much more to this. If my point of view needn’t immediately eradicate yours — if we are having not a contest but an ongoing comparison, whether in politics, art or literary criticism, if “knowledge” is and will remain provisional (and we could put a huge shout-out to Rorty here, if we had the space and the breath) what would this mean to the quality of our discourse, or to the subsequent character and quality of “understanding”?

Maybe disagreement doesn’t have to be a battle to be fought to the death; it can be embraced, even savored. Wikipedia as it is now constituted lends enormous force to this argument. The ability to weigh conflicting opinions dispassionately and without requiring a “decision” is invaluable in understanding almost any serious question. That much is clear right now. There are many, many practical political, pedagogical and epistemological benefits yet to be investigated.

“Learning” no longer means sitting passively in a lecture hall or in front of a television or in a library and waiting to receive the “authoritative” version of what the experts think is up as if it were a Communion wafer. For nearly 20 years we have had the Internet, now grown into a medium of almost infinite paths, where “learning” means that you can Twitter directly to people in Egypt to ask them what they really think about ElBaradei (and get answers), ask an author or critic to address a point you feel he may have missed (ditto), or share your own insights in countless forums where they will be read and admired (and/or savaged). Knowledge is growing more broadly and immediately participatory and collaborative by the moment.

The results of these collaborations, like Wikipedia, represent not just new methods of packaging knowledge, but a new vision of what might come to be meant by “knowledge”: something more like what Marshall McLuhan called “a galaxy for insight.”

“The sadness of our age is characterized by the shackles of individualism,” Bob Stein said. But are we throwing off those shackles, even as we speak?

Maria Bustillos is the author of Dorkismo and Act Like A Gentleman, Think Like A Woman.

Timex advertisement via Boing Boing. Photo of Chen and Wales by Beatrice Murch; alchemist at work print from C.J.S. Thomson; both via Wikimedia Commons.